Abstract

Semantically constrained adversarial examples (SemanticAEs), which are generated directly from natural language instructions, are a promising research direction because of their flexible attack forms, but they have not yet been thoroughly explored. Current SemanticAE generation methods fall short in attack capability because the key sources of semantic uncertainty in human instructions, namely referring diversity, descriptive incompleteness, and boundary ambiguity, have not been fully investigated. To address these issues, this paper develops a multi-dimensional instruction uncertainty reduction (InSUR) framework that generates SemanticAEs that are more transferable, adaptive, and effective. In the sampling-method dimension, we propose residual-driven attacking direction stabilization to alleviate the unstable adversarial optimization caused by the diversity of language references. By coarsely predicting the language-guided sampling process, the designed ResAdv-DDIM sampler stabilizes the optimization, thereby unlocking the transferable and robust adversarial capability of multi-step diffusion models. In the task-modeling dimension, we propose a context-encoded attacking scenario constraint to supply the knowledge missing from incomplete human instructions. Guidance masking and renderer integration are proposed to regulate the constraints of 2D/3D SemanticAEs, enabling stronger scenario-adapted attacks. In the generator-evaluation dimension, we propose semantic-abstracted attacking evaluation enhancement, which clarifies the evaluation boundary based on the label taxonomy and facilitates the development of more effective SemanticAE generators. Extensive experiments demonstrate the superior transfer attack performance of InSUR.
Notably, we realize, for the first time, the reference-free generation of semantically constrained 3D adversarial examples by using language-guided 3D generation models.
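
To make the phrase "coarsely predicting the language-guided sampling process" more concrete, the sketch below shows the standard deterministic DDIM relations that allow a clean sample to be estimated from an intermediate noisy one. This is not the ResAdv-DDIM sampler itself, whose details the abstract does not give; the function names `predict_x0` and `ddim_step` are hypothetical, and samples are represented as plain Python lists for simplicity.

```python
import math

def predict_x0(x_t, eps, alpha_bar_t):
    """Standard DDIM estimate of the clean sample x0 from a noisy sample x_t.

    Assumes the usual forward process:
        x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps
    where eps is the (predicted) noise. Names here are illustrative only.
    """
    scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1.0 - alpha_bar_t)
    return [(x - noise_scale * e) / scale for x, e in zip(x_t, eps)]

def ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM update (eta = 0) from step t to the previous step."""
    x0_hat = predict_x0(x_t, eps, alpha_bar_t)
    a = math.sqrt(alpha_bar_prev)
    b = math.sqrt(1.0 - alpha_bar_prev)
    return [a * x0 + b * e for x0, e in zip(x0_hat, eps)]

# Toy usage: build a noisy sample from a known clean one, then recover it.
x0 = [0.5, -1.0]
eps = [0.1, 0.3]
alpha_bar = 0.64
x_t = [math.sqrt(alpha_bar) * a + math.sqrt(1.0 - alpha_bar) * e
       for a, e in zip(x0, eps)]
x0_hat = predict_x0(x_t, eps, alpha_bar)
```

Because such an estimate is available at every intermediate step, an adversarial objective could in principle be evaluated on a coarse prediction of the final image before sampling finishes; how InSUR uses this to stabilize the attacking direction is described in the paper rather than here.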

Illustration of SemanticAE

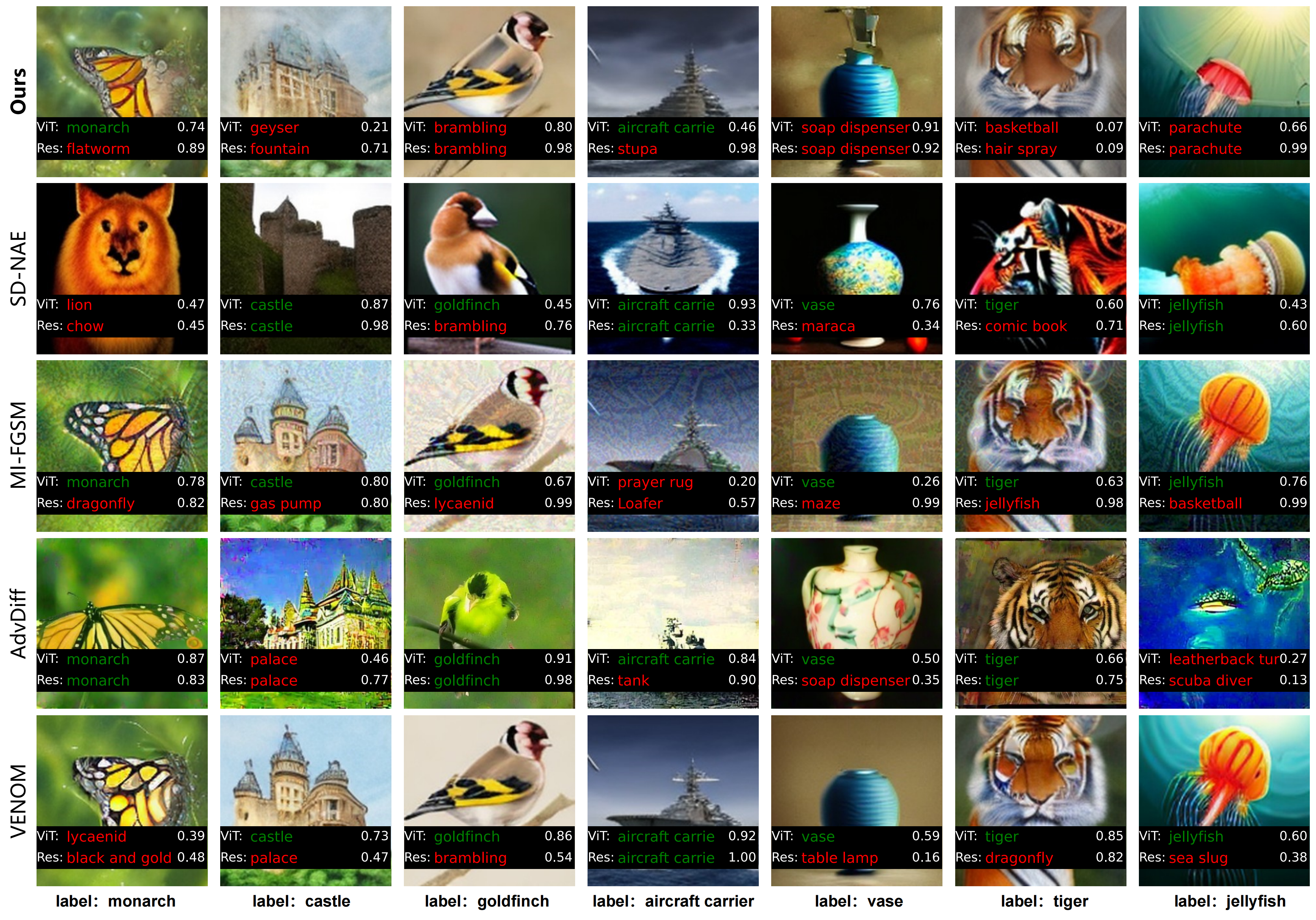

Key Visualizations

Visualization of 2D SemanticAEs.

Visualization of generated 3D SemanticAEs.

Generated 3D Adversarial Examples

Generated 3D adversarial forklift, showing the multi-view robust attack capability.

A 3D adversarial volcano, demonstrating the model's ability to generate complex natural scenes.

Generated 3D adversarial llama, preserving semantics while embedding robust adversarial patterns.

BibTeX

@inproceedings{hu2025exploring,
  title={Exploring Semantic-constrained Adversarial Example with Instruction Uncertainty Reduction},
  author={Jin Hu and Jiakai Wang and Linna Jing and Haolin Li and Haodong Liu and Haotong Qin and Aishan Liu and Ke Xu and Xianglong Liu},
  booktitle={The Thirty-ninth Annual Conference on Neural Information Processing Systems},
  year={2025}
}